How AI slop undermines our understanding of reality

Biblical floods in Spain provide an object lesson in what people refuse to believe

Earlier this week the Spanish region of Valencia experienced dramatic amounts of rain. Actually, “dramatic” hardly does it justice. A year’s worth of rain fell in a single day. How much was that? About 40cm, or close to 16 inches. Bear in mind that when one says “an inch of rain fell”, that means enough rain fell over the specified area to cover all of it to that depth. So when we say that parts of Valencia received 40cm of rain, any patch that didn’t hold on to that depth of water, for whatever reason, means that some other patch had to hold more instead. And if there’s any sort of slope around, that water will try to run down it, obeying the insistent call of gravity.

The New York Times reported:

Though storms are typical during the fall [autumn] in Spain, local residents were shocked at the sheer amount of rain: more than 70 gallons per square yard in some villages. In the village of Chiva, more than 100 gallons per square yard of rain fell in eight hours, practically a year’s worth, Spain’s meteorological agency said.

The agency added that it expected some 40 gallons per square yard of rain before 6 p.m. local time on Wednesday over parts of Valencia, Andalusia and Murcia. The storm was moving toward the north and northwest of Spain, with rain expected to continue until at least Thursday.

As noted above, when you have that amount of water, any sort of gradient becomes an irresistible force. Water is also very heavy, at a kilogram per litre, so at more than 100 gallons per square yard (or, as the metric-based Spanish meteorological agency actually said, 490 litres per square metre) it only takes about three square metres’ worth of rainfall to weigh as much as a car. Put it at the top of a hill and set it in motion, and you have something that will easily move a car. Get a large area of that amount of water, channel it down something narrow, such as a street hemmed in by buildings with cars parked along the side, and it will pick them up and drop them just about anywhere.
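The arithmetic above is easy to check for yourself. Here is a minimal Python sketch, assuming US gallons (the unit the New York Times uses) and fresh water at one kilogram per litre. The 1,500kg figure for a typical car is an assumption for illustration, not something from the reports. The sketch also shows why depth and volume are interchangeable: a litre spread over a square metre is a layer one millimetre deep.

```python
# Exact unit definitions
LITRES_PER_US_GALLON = 3.785411784
SQ_METRES_PER_SQ_YARD = 0.83612736
KG_PER_LITRE = 1.0  # fresh water, to a close approximation


def gal_per_sqyd_to_l_per_sqm(gal_per_sqyd: float) -> float:
    """Convert a rainfall total in US gallons per square yard to litres per square metre."""
    return gal_per_sqyd * LITRES_PER_US_GALLON / SQ_METRES_PER_SQ_YARD


def depth_mm(l_per_sqm: float) -> float:
    """One litre spread over one square metre is a layer 1mm deep."""
    return l_per_sqm


def water_mass_kg(l_per_sqm: float, area_sqm: float) -> float:
    """Mass of the rain that fell on a given area."""
    return l_per_sqm * area_sqm * KG_PER_LITRE


def area_to_match_sqm(target_kg: float, l_per_sqm: float) -> float:
    """Area whose rainfall weighs as much as target_kg."""
    return target_kg / (l_per_sqm * KG_PER_LITRE)


CAR_KG = 1500  # assumption: a typical family car, for illustration only

print(round(gal_per_sqyd_to_l_per_sqm(100)))    # 100 gal/yd² in L/m² → 453
print(depth_mm(490) / 10)                        # 490 L/m² as a depth in cm → 49.0
print(water_mass_kg(490, 3))                     # rain on 3 m², in kg → 1470.0
print(round(area_to_match_sqm(CAR_KG, 490), 2))  # m² of rain weighing as much as the car → 3.06
```

The first line shows that 100 US gallons per square yard is about 453 litres per square metre, so the agency’s 490 litres works out at a little over 108 gallons, which fits the Times’ “more than 100”.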

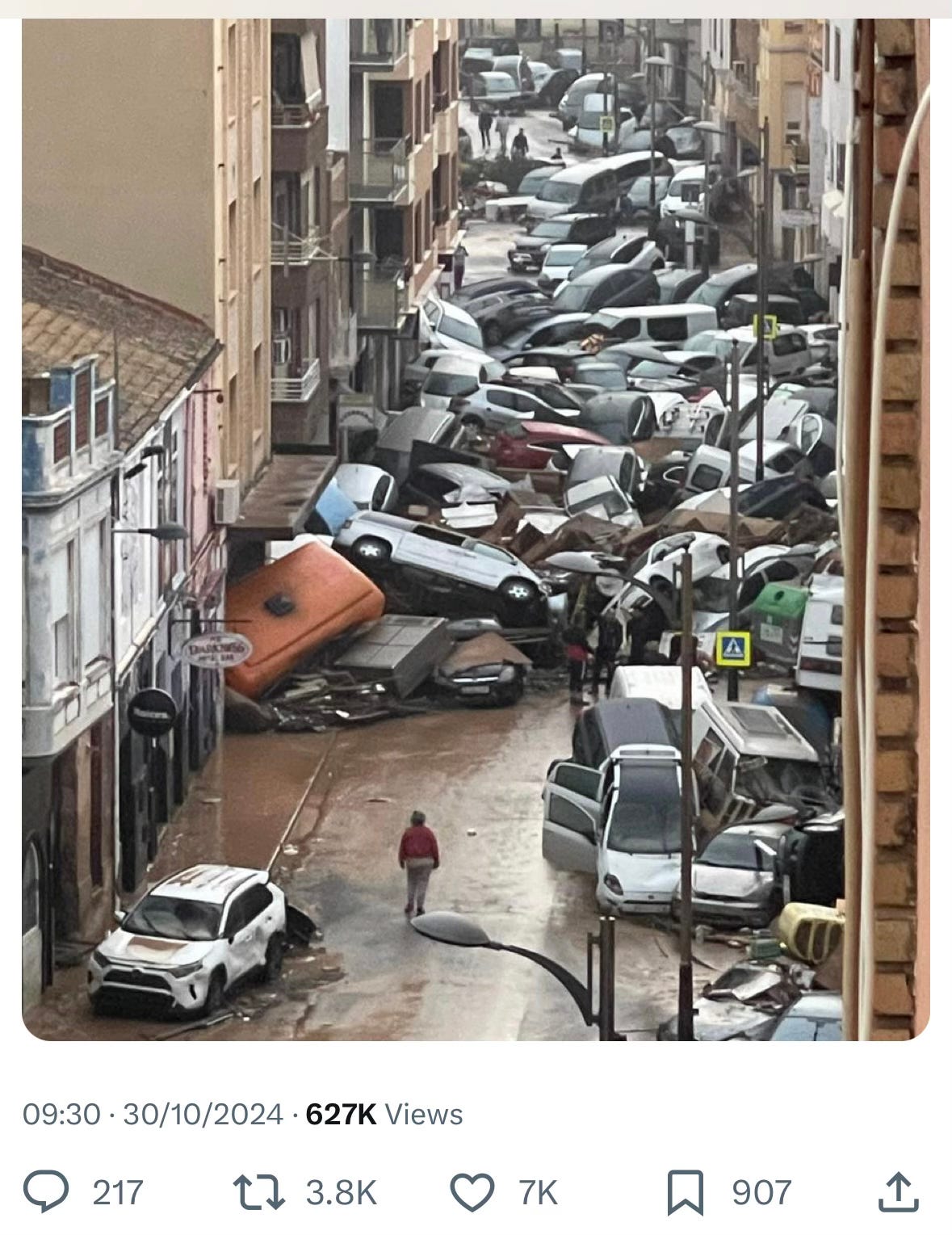

And once the floodwaters have passed, you will have some utterly dramatic images. Such as this one:

So guess what the first response was?

One’s first reaction is: urgggggggh. People have died, people are suffering, people’s houses and pets and livelihoods have been swept away, and you’re denying the reality of a photograph depicting how climate change is screwing with people’s lives.

The second reaction is: this is how AI degrades our experiences. When people see that you can create reasonable-looking pictures of disasters using AI content generators, they are inclined to think that real disasters aren’t happening—especially those which are happening far away, to people they’re not in contact with, caused by processes they don’t want to believe will affect them. People hate the idea that climate change is going to affect them. It’s too big to grasp, too hard to think of how to tackle it; easier, much easier, to deny it’s happening. And that includes denying that enormous floods could pick up cars like they were matchsticks and sweep them along a road.

This wasn’t the only person to claim that that photo wasn’t real:

It’s not obvious why these people thought that photo in particular wasn’t real, while accepting the other ones as genuine. Perhaps it’s something about the sheen of the cars and the peculiar roundedness of the shapes, and maybe the lack of obvious damage.

Again, the problem this illustrates is how people are now predisposed not to believe things that are real. It’s not that we can’t tell the difference; it’s that it’s easier not to bother to find out.

Fortunately, in the case of that photo, we can find out.

Look back up at the original, and you can see there’s a sign just outside whatever the large orange thing is. In slightly better quality photos, you can see that it says “Pub DARKNESS Metal Bar”.

So we go and look up “Pub Darkness Metal Bar”, and get this Facebook page which gives the address: Avda. Gomez Ferrer Nº 52, Sedaví, Spain, 46910.

Apple Maps gives us a ground view:

Rotate the view, and look up the street:

It’s a match—note how the building beside the pub is painted pink, and the one beyond it is a light ochre, then dark brown jutting out, and so on. There’s also the fluorescent pedestrian crossing sign on the opposite side of the road.

And look at all those lovely cars just waiting to be swept away by a flood of barely imaginable proportions. The photograph—which appeared on the Associated Press feed, I think—was simply taken from a higher vantage point. Looking back up the street, there’s an apartment block which probably offered that position.

Stick a telephoto lens on and, allowing for the foreshortening, you’ve got your picture. (Incidentally, the Volcaholic account on X is excellent for photos and videos of all the exceptional weather incidents around the world, while also doing straightforward fact-checking.)

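We can conclude: that photo isn’t AI-generated. You can’t get an AI system to generate a photo that reproduces an existing location this faithfully, down to the colour of each building and the position of the road signs; it’s just not possible given the current state of the art.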

Only now with the advent of more and more images and video from the ground are we discovering the extent of the damage, which is colossal. Huge areas are underwater.

But the way that some people have lost trust in reality isn’t good to see. Peculiarly, it’s the mirror image of, yet just as problematic as, the reaction of the American politician who shared an AI-generated picture purporting to show a girl in a post-hurricane flood, and then insisted that “I don’t know where this photo came from and honestly it doesn’t matter”. Misinformation for thee, but not for me, eh.

The problem of people trusting things that aren’t real even though an AI generated them, and dismissing things that are real because an AI might have generated them, is going to get worse. Meta is going to inject more AI-generated content into user feeds on Facebook, Instagram and Threads, because apparently humans just aren’t productive enough when it comes to generating Stuff to put around the adverts. Google’s AI Search (“with added Gemini!”) serves up AI-generated answers to your queries, so that those damned humans and their websites become an afterthought you don’t have to click through to.

Meanwhile, a group of web publishers went to Google earlier this week to point out, more or less gently, that they’d been unfairly downranked in the latest search update, and that it was destroying their business. Live by the Google ranking, die by the Google ranking, but one of the stunning revelations was that Google doesn’t seem to know quite how things rise up and down its rankings, and moreover that it can’t tell the difference between a longstanding human-written site and a new AI slop site. The idea that there isn’t some sort of longevity measure for sites is properly astonishing.

This is how you really undermine trust in what you’re reading: when the biggest search engine in the world replaces human work with AI-generated summaries (which may or may not be accurate, just like search results), and ignores human work in favour of AI content. We’re not even at the point where AI content is overwhelming us; we’re at an earlier point, where it simply undermines our trust in what we’re seeing.

But the good thing is this: we can still check stuff. We can still find out for ourselves. Sometimes it feels like defending outposts against a horde of invaders whose modus operandi is to feed on indifference, rather like the people drugged on soma in Huxley’s Brave New World. So we have to refuse to be indifferent.

• You can buy Social Warming in paperback, hardback or ebook via One World Publications, or order it through your friendly local bookstore. Or listen to me read it on Audible.

You could also sign up for The Overspill, a daily list of links with short extracts and brief commentary on things I find interesting in tech, science, medicine, politics and any other topic that takes my fancy.

• I’m the proposed Class Representative for a lawsuit against Google in the UK on behalf of publishers. If you sold open display ads in the UK after 2014, you might be a member of the class. Read more at Googleadclaim.co.uk. (Or see the press release.)

• Back next week! Or leave a comment here, or in the Substack chat, or Substack Notes, or write it in a letter and put it in a bottle so that The Police write a song about it after it falls through a wormhole and goes back in time.

What a great article... I have to say I find your observations very insightful. I look forward to next week’s article.

"It's AI generated" seems to be the updated version of "It's Photoshopped".

https://www.explainxkcd.com/wiki/index.php/331:_Photoshops

Oh woe fell upon us back then, how could we ever believe an image again, when - *gasp* - wait until you hear this - the power to create false images became so widely available. Any troll in their basement could make a passable fake photograph, where previously that was a highly professional task. Surely this meant the fall of Truth, as nobody would ever be able to trust anything they did not see themselves.

Then people grew up with it, and it stopped being ScaryTech.