They looked from Google to OpenAI, and back again, and couldn't tell them apart

Bad things happen when hubristic aims collide at the midpoint of ambitions

Operational note: due to holidays, the next edition will be on Friday June 21. (Or perhaps June 14 if I come back inspired enough from the holidays.)

The election is going so well, isn’t it? As forecast last week, we’re already seeing both dark ads on Facebook (best followed via Who Targets Me, visible here on “Full Disclosure”) and people being deselected for their social media history. (OK, only one person so far, but it’s early days.) And yes, journalists are living it up on the social network we (journalists) all love to hate to love.

There are five weeks still to go until the post-election morning, and the memes are piling up rapidly. I think, so far, my favourite is the TikTok response to the Conservative announcement of their “we’ll introduce National Service but in a form that’s not actually useful to anyone” policy:

Since seeing it, I can’t imagine Rishi Sunak as anything other than Shrek’s Lord Farquaad: the laughably incompetent, unbearably entitled, angry little man trying in vain to get something done. (Image via Tom Hamilton, who got it via Tom Harwood.)

But let’s put the memes aside. Instead, I wanted to look at something far more concerning: the coming collision between Google, formerly known for its search engine but increasingly seeking to be known for its chatbot, and OpenAI, formerly known for its chatbot but increasingly seeking to be known for its search.

Yes: the two are on a collision course because each is envious of what the other has. Google has a huge successful brand name and site that everyone comes to when they want to find information. OpenAI has a quite big brand and a site that quite a lot of people come to when they want a computer to pretend to be intelligent and knowing, because OpenAI’s site is powered by artificial intelligence (AI).

This, to Google, is a drastic upset to the natural order of things, particularly because it has been talking up its capabilities in AI for years. As far back as May 2018 it showed off an Assistant program called Duplex which, in the demo, would call a hairdresser and book an appointment for you using a computer-generated, but natural-sounding, voice. Six years ago! Voices! Talking! On the phone! (Doubts were raised about that 2018 demo, which was suspiciously straightforward.)

Duplex expanded very quietly from a 2019 launch, but now seems to be available in 49 US states (guess the exception![1]) and 16 countries, including the UK.

It’s an AI chatbot! Talking to humans! And yet, one must imagine some Google engineers fuming, nobody is recognising their fantastic work. There’s a reason for this: Google has been remarkably quiet about Duplex. Can you recall an advert for it? Or one of the executives talking it up in an interview? I couldn’t find any examples. Duplex is an artificially intelligent rock thrown into a pond, whose ripples Google seems to have been very keen to minimise.

So there’s Google, strutting along, internally confident of its machine learning capabilities, especially with the incorporation of DeepMind and its deep learning systems. And then up pops OpenAI, which in 2020 announces the language model GPT-3, in 2021 the DALL-E image generator, and then at the end of November 2022 lets everyone have a go at ChatGPT, which basically sets the tech world on fire, getting a million signups in five days, and lots and lots of attention.

One can only imagine the panic in the upper floors of Google HQ. Plus that simmering resentment: why aren’t we recognised for all our AI stuff? Cue a scramble to get chatbots into everything, including of course Google Search. According to those who were there, it’s been reminiscent of the scramble in 2010 to make Google+ happen as a social network to compete with Facebook, despite there being absolutely no need for Google to compete with Facebook. (Google+ was launched in 2011 and closed, somewhat shamefacedly, in 2019, having long since withered.)

Fast forward from late 2022, when Google realised that OpenAI’s DALL-E and ChatGPT looked like compelling offerings, to now.

OpenAI is being sued by the New York Times for producing output which looks suspiciously like unadulterated NYT content. About 5% of the online population say they have “used generative AI to get the latest news”. The same study (by the Reuters Institute) found that ChatGPT is two to three times more used than Google Gemini and Microsoft’s Copilot (which also runs on OpenAI’s models).

And, get this, about 24% say they’ve used generative AI for “getting information”. If you’re Google, you see data like that and you freak out. People are asking ChatGPT for information?? Are they mad?? That’s Google’s job!

Introducing… Google AI Overview. By now you should have heard about it. Essentially, Google takes the search terms you’ve put in, tries to discern what question you’re asking, feeds that into its large language model (LLM), and serves up the answer.

The effect is disastrous. There have been plentiful stories of how AI Overview has served up advice to eat just one rock a day, to put glue on your cheesy pizza to make it stick, and so on. The reason this approach is wrong is that the users are human. As John Herrman points out at NY Mag:

It’s easy to see, from Google’s perspective, how automating more of the search process is incredibly obvious: Initially, it could scrape, gather, and sort; now, with its powerful artificial-intelligence tools, it can read, interpret, and summarize. But Google seems manifestly unable to see its products from the perspective of its users, who actually deal with the mess of using them and for whom ignoring a link to The Onion (the “eat rocks” result) or to an old joke Reddit thread (the “eat glue” result) is obvious, done without thought, and also the result of years of living in, learning from, and adapting to the actual web. AI Overviews suggest a level of confidence by Google not just in its new software for extracting and summarizing information but in its ability to suss out what its users are asking in the first place and in the ability of its much older underlying search product to contain and surface the right information in the first place.

Meanwhile, coming from the other direction, OpenAI is doing deals with multiple outlets, and the latest, with Vox Media (owners of tech site The Verge) and The Atlantic, will include the condition that “content from Vox Media — including articles from The Verge, Vox, New York Magazine, Eater, SBNation, and their archives — and The Atlantic will get attribution links when it’s cited.”

When it’s cited? What this says to me, when considered along with all the other deals OpenAI is doing with news publishers, is that it’s aiming to become a sort of search engine.

So here we are: Google is trying to turn itself into a single-answer chatbot, and the single-answer chatbot is trying to turn itself into a search engine. They collide in the middle. Then what?

Then: nothing good. Too few people have grasped that ChatGPT and all of the LLMs are not search engines, and they are not intelligences. Do not use them to settle factual disputes. Do not use them to make pleadings in court cases. They are stochastic parrots: a parrot doesn’t know what the noises it makes mean, and the LLM just uses likelihood to decide what word to output next. You can get pretty close to sense by this method if your word-prediction machine has been trained on the words that have appeared on the internet.
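To make the parrot concrete, here is a toy sketch of “use likelihood to pick the next word”. It is emphatically not how ChatGPT or Gemini is built: real LLMs use neural networks trained on vast amounts of text, whereas this just counts which word follows which in a tiny made-up corpus. The function names (`train_bigrams`, `next_word`, `parrot`) and the corpus are my own illustrative assumptions. The principle, though, is the same: nothing in here knows what a rock or a pizza is.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def next_word(followers, word, rng):
    """Pick a follower at random, weighted by how often it was seen."""
    counts = followers.get(word)
    if not counts:
        return None  # the parrot has never heard anything after this word
    candidates = list(counts)
    weights = [counts[c] for c in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def parrot(followers, start, length, rng):
    """Generate up to `length` words by repeatedly guessing the next one."""
    out = [start]
    for _ in range(length - 1):
        word = next_word(followers, out[-1], rng)
        if word is None:
            break
        out.append(word)
    return " ".join(out)


# Hypothetical training text, invented for illustration only.
corpus = (
    "you should eat one rock a day . "
    "you should put glue on pizza . "
    "you should eat pizza ."
)
model = train_bigrams(corpus)
print(parrot(model, "you", 8, random.Random(0)))
```

Run it a few times with different seeds and you get fluent-ish sentences that are sometimes sensible and sometimes tell you to eat a rock. The machine can’t tell the difference, because all it has is counts.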

To repeat: there’s no intelligence there. There’s no inspirational genius in there either. The LLM can’t write a novel: it doesn’t understand character, plot, arc. You can expand how many sentences the parrot can say, but you can’t make it understand.

The danger is that Google is turning something that was simple, examinable and trusted (the ten blue links) into something that is complex, uninterrogable and untrustworthy. Chatbots have their uses: for summarising limited amounts of information that you feed them, for suggesting ideas when you’re out of them, for organising data you feed them. But Google has been led astray by the idea that it knows what you’re after when you enter some search terms; as Herrman points out, humans are far more complicated than that. (And 15% of search queries each day have never been seen before, per Google, which amounts to about 500 million results pages a day served in response to brand new questions.)
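For anyone who wants to check the sums: taking the two figures above at face value (15% of daily queries are new, and that is about 500 million queries), you can back out the total daily search volume they imply. The total below is my own derivation from those two numbers, not a figure Google has published here, and the function name is just an illustrative label.

```python
def implied_total_searches(novel_per_day, novel_share):
    """Total daily searches implied by the count and share of never-seen queries."""
    return novel_per_day / novel_share


NOVEL_PER_DAY = 500_000_000  # "about 500 million", from the text above
NOVEL_SHARE = 0.15           # "15% of search queries each day", per Google

total = implied_total_searches(NOVEL_PER_DAY, NOVEL_SHARE)
print(f"Implied total: about {total / 1e9:.1f} billion searches a day")
```

That is a lot of questions for a machine to guess the intent of, every single day.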

OpenAI, meanwhile, is coming at this from the other end: hubris, but of a different form. It thinks its magical tool can be applied to anything—including search. Wrong.

The problem is that the internet has shown us over the years the simple truth: there’s no single answer to your question. It depends on what you wanted to know. Did you want to find that Onion article from years ago? Or maybe you really did wonder if rocks were good? Or how to make your cheese stick to the pizza? The machine can’t understand human intent. Google’s own blog from 2012 quotes a Larry Page desire that he expressed much, much earlier in the company’s existence:

Larry Page once described the perfect search engine as understanding exactly what you mean and giving you back exactly what you want.

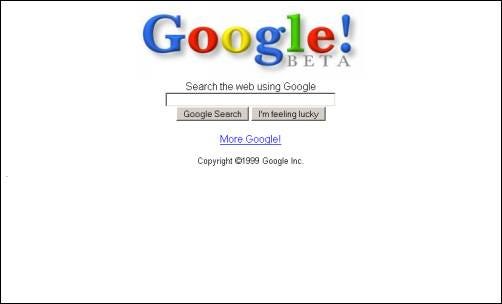

Well, I’ll describe the perfect engine as being one that runs forever on zero fuel. Guess what! That can’t exist either. Both Google and OpenAI are in pursuit of the impossible, and they can’t get closer to it either. Humans understand that. We see a page of search results, and we peruse them looking for the one we trust above the others, or whose topic matches what we actually wanted to ask. (This is, it should be said, getting harder as more pages are filled with AI slop and then SEO’d to hell and back.) I’d love to know how many people, these days, hit the “I’m Feeling Lucky” button. (An estimate a few years ago was that it’s 1% of searches, but Google doesn’t seem to have commented officially.) I bet it’s only there for decoration these days.

The concerning thing is that both companies seem locked into these two trajectories. That’s bad for search, and bad for the web, because of the two, it’s far more consequential if Google runs down a cul-de-sac marked “AI Overview”. OpenAI can and will pivot away from it—there are other strings to its bow, such as the video generator Sora. Google, however, might struggle to undo the bad that it does with its move to single-answer search. It’s already rolling up the answers to lots of questions into glanceable lines that you can bounce off without ever going to a linked site.

That, in turn, could have much bigger knock-on effects. The research group Gartner predicts that search volumes will fall 25% by 2026 because of LLM use. The creative media group Raptive suggests that could cost websites $2bn in lost visits, with some losing two-thirds of their traffic. It’s a frankly terrifying prospect for lots of media, and there’s nothing they can do about it except to reduce their reliance on Google—while at the same time not really being able to deny Google access to their site because that would mean a downranking, and any ranking is better than none.

There’s no reassuring answer here. Google might reverse course, as it did with Google+, but that could well take years, and during that time the damage will be done. We’ve passed peak TV, when the money was pouring into streaming services; now that’s in reverse, and the streaming services are cutting back. Perhaps we’ve also passed peak web—or peak commercial web—and it’s going to slide backwards towards a status quo ante that we didn’t realise was the status quo at the time.

Maybe I should ask Google’s AI Overview. What do you think it would say?

• You can buy Social Warming in paperback, hardback or ebook via One World Publications, or order it through your friendly local bookstore. Or listen to me read it on Audible.

You could also sign up for The Overspill, a daily list of links with short extracts and brief commentary on things I find interesting in tech, science, medicine, politics and any other topic that takes my fancy.

• Back in two or possibly three weeks! Or leave a comment here, or in the Substack chat, or Substack Notes, or write it in a letter and put it in a bottle so that The Police write a song about it after it falls through a wormhole and goes back in time.

[1] Louisiana. No idea why. Explanations welcome.

I feel that Google providing top-of-page results which people look at and say “huh??” is a bigger threat to its monopoly than a rival AI answer system which can never match it for scale. When you debase your own brand it’s a far bigger problem than when someone external does so.

The Google AI illustration stuff was not a problem because it was only apparent to a tiny number of people. The AI Overview stuff is much worse - as evidenced by Google realising this and dialling back on it, as was announced on Friday.

There may be some "pundit extrapolation" problems here. New product launches can be full of glitches, and that often leads to immediate articles about a bug or oddity. And it's not clear if the types of issues which fascinate educated-professional writers (who are highly unrepresentative of the general population) matter too much for a general business case. Google knows very well that AI answers could be a threat to its search near-monopoly. This is true even if a rival AI answer system produces results that the chattering class finds to be absolute outrage-fodder (see the continued survival of Twitter/X, or for that matter, the ongoing political career of Donald Trump). This is obviously a rushed launch, but I suspect the reasons for that are internally valid for Google.